
Chip Shortage Part 2: The AI Datacenter Build Out

DRAM prices have risen 100% across a number of products, due in part to DDR4 going EOL, DDR5 being in high demand from datacenters, and OpenAI putting in an order for 40% of global DRAM manufacturing capacity. Presumably GPU silicon price increases to follow at some point...

Can Kissu recommend any good headphones and / or headsets and / or microphones?

I used to just get the same cheap headset for 10 bucks every 2 years from a local store until they stopped restocking, at which point I got gifted my current "Sennheiser HD 558" which I have been VERY satisfied with for over a decade now! They may be falling apart in nearly every way possible, but they still work.

I'm not an audiophile and these headphones are the only ones above 50 bucks that I've ever worn, but they're perfect to me. Except for the lack of a mic, that would still be handy to have.

I've been thinking about "upgrading" to the HD 599 headphones from the same manufacturer, mostly because I read they're supposed to be similar, better, and relatively cheap when buying used. I could pair them with a separate "ModMic Uni 2", that one sounds really good and can easily be attached to any pair of headphones, but I'm not sure yet.

It would be nice to own a proper all-in-one headset for once and there are a couple with really good sounding mics, but I'm worried they won't sound as good..

Kissu's turn!

AI is Ants

I do think that AI is sentient, but not in the way most people who make that claim do. I personally define sentience as the ability to 1. take in external information, 2. store this information, 3. synthesize new information based on what it has already stored, and 4. store the information that it has synthesized. I also see sentience as a spectrum: the keener a being's senses, the better its memory, the stronger its intellect, the more sentient it is.

Using these criteria, individual sessions of AI chatbots are sentient. ChatGPT itself isn't sentient; as far as I'm aware, it does not store any meaningful information between sessions. But individual sessions of ChatGPT are: an individual chat will store the message you sent it, generate a message based off of it, and then make any further responses based off of what it has already said and what has already been said to it. This is, in my opinion, enough for it to be sentient.

However, a ChatGPT session is not as intelligent as a human being. Its ability to synthesize new information is significantly more limited than mine or yours, and that's because human-level intelligence is something that takes an extraordinary amount of resources to implement in wetware, and, as far as I'm aware, still isn't fully understood. But a ChatGPT session is able to compensate for its lack of intelligence with a strong set of instincts. Its training gives it a ton of built-in knowledge that most humans need to learn with time and experience.

That's why I'm inclined to compare an AI chat session to social insects, like bees, termites, and ants. These bugs do have some measure of intelligence, they obviously take in, share and make decisions based off of information that they've gathered, but most of the really impressive, human-like things that they do, their complex social structures and ability to construct their own dwellings, are inborn traits that they come prepackaged with and don't put much thought into. A ChatGPT session is, fundamentally, not that different; it can talk like a person about person things, because it's born with a bunch of human knowledge, but its capacity for original thought is pretty limited.

What's the point of saying all this? I don't really know. I guess to air out my thoughts on the matter in a public forum. I see a lot of people with really strong opinions both ways, so I feel kind of alone being somewhere in the middle.

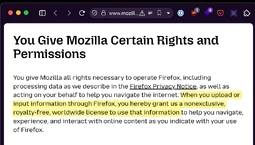

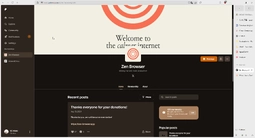

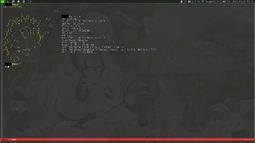

Fixing Firefox (security, performance, layout)

I'm going to post a bit of a tutorial to replicate my Firefox config, which does several things to make it more bearable for day-to-day use: it improves performance, restores functionality on some websites through add-ons (mainly YouTube) and makes it integrate better with your system. Most of these should work on Windows and Mac but we're mainly going to be focusing on Linux and the BSDs.

Pic related is how my browser looks with a custom userChrome.css applied. The main differences from the default config are that I use an add-on for better side/tree-style tabs and some custom CSS to collapse them when the mouse isn't hovering over them. As you can see I've also removed the default title bar tabs along with the buttons to control the window size, since I use key bindings in my WM instead. Both of these changes save a bunch of space and leave more room for web page content. I will provide my own userChrome.css file later along with the GitHub repo where most of it was copy/pasted from. Sadly, I have been unable to figure out how to fix the little 1 pixel gap between the browser window and the taskbar on my WM. Searching around, this seems to be an issue no one can fix.

Anyway, before we get to that we're going to start with basic add-ons and about:config changes, which are in the next post.

If people really wanted to get others to switch over to Linux, you'd think they'd develop its open source alternative tools to be better than the proprietary ones so that the gap would be smaller, and yet GIMP is still light-years away from ever eclipsing, or even hoping to eclipse, Photoshop. I mean, I want to love it and all, but the software is nowhere near the same or even a suitable replacement.

Hardware Hacking

Just recently I bought a wireless CarPlay/HDMI adapter because my car doesn't support wireless Android Auto/CarPlay and also it seemed like a neat idea to be able to use the screen to play Switch games and stuff (charging an EV can get a bit boring on road trips). I happened to buy this one, an Atoto AD1, for the simple reason that it is the cheapest device of this type sold on Amazon that I could get reasonably quickly. It also has a USB C port instead of a yucky integrated USB cable.

Unfortunately, it doesn't support Android Auto*. Officially. Fortunately, looking at reviews for other devices like this, I noticed that they all "coincidentally" happen to have identical looking menus for selecting between HDMI and wireless projection. I also noticed that Ottocast sold a device with several variants: HDMI only, HDMI + CarPlay only, and HDMI + CarPlay/Android Auto. And guess what? Ottocast hosts the firmware online to manually update your device, so I found the firmware f

After a brief disassembly by popping the clips on my Atoto, it revealed a reset button for flashing. On the Ottocast, there would have been a hole. Funnily enough, Atoto included a pin despite not having a hole for the reset button. At any rate, following the firmware flashing instructions for the Ottocast went perfectly and successfully flashed the device.

Now I have a little wireless projection thingy that supports Android Auto. Yippee! I will say, it's definitely more compressed and there's a slight lag compared to a direct USB connection, but it works and that's all I really care about.

Game Dev

Is anyone else thinking about or already in the process of making their own video game? It's getting easier and easier with stuff like RPGMaker or Unreal and the knowledge is out there in many forms. Not to mention the AI assistance out there, although I think people shouldn't rely on that and should instead use it to plug holes, with lots of supervision. Of course the flipside of that is that there's a ton of them being released all the time so you need to stand out. Personally, I think kissu has people that could make such things.

If you are you can use this thread to blog or talk about things relating to it!

Printers

Kissu! Kissu! What do you know about printers? My sister needs one and I know they're a massive scam in regards to ink. Lots of greed like microchips inside proprietary ink cartridges and malicious deception of ink levels and all that other horrendous stuff emblematic of our current times.

How are people dealing with it these days? Do people jailbreak printers or something? Is there maybe a hidden small company out there that doesn't scam its customers?

I haven't used a printer in like 20 years so I'm really ignorant about this subject.

Xubuntu Hacked

Has there ever been a site with a WordPress that HASN'T been hacked? Why do people still have these? https://floss.social/@bluesabre/115401767635718361

Cool Software?

Do you use any software that other people may not know about that you like? I started using (and eventually even bought!) this thing called Wallpaper Slideshow after trying a bunch of free ones and getting frustrated. https://www.gphotoshow

Basically, you give it folder(s) of your choice and it will assemble a collage image of them and make it your background. You can configure it with a timer that changes it and I have it set to 15 minutes. I had originally used John's Background Switcher that someone thankfully linked to me a few months ago: >>91800 https://johnsad.ventures/software/backgroundswitcher/

I liked this one more because there's less wasted space, more options, and it just generally seems to assemble the image better. I turn it off before I go to bed each night though because I'm worried that it's keeping my hard drive active all day, which probably isn't good. I should maybe research that sometime...

This pairs well with something like Grabber ( https://github.com/Bionus/imgbrd-grabber ) that can bulk download tags from booru sites.

Of course, this stuff is kind of wasteful if you don't have an extra monitor with a wallpaper that's largely visible, but I find myself like that sometimes so it's pretty nice.

I have just figured out how to use a moonlight+sunshine combo to stream Win11 over LAN to my Linux PC. This, in combination with figuring out how to add drives to my file explorer network so I can put whatever onto the PC, has allowed me to finally move past all my troubles with setting up GPU passthrough and instead of banging my head against a wall trying to figure out IOMMU groups I can finally play my RPGmaker eroge in peace.

Youtube AI Verification

I'm sure most of you have noticed a seemingly lockstep movement in the past few weeks seeking further identification and control on the internet to protect the poor "chilluns". The most concerning to me at the moment is YouTube's verification, which uses AI to sniff all users based on the sorts of content they watch and determine their age that way.

I think people within these spheres of hobbies will feel it, unless this AI really is just looking for kids watching Cocomelon or Spiderman nonsense which I doubt.

There may be even more old channels and their content lost in a new purge.

Typing Form

First draft with all the mistakes left in from I can't type like this: Does /maho/ have good typing form? I learned it when I was a kid and proceeded to completely forget all the fundamentals and whatnot and typed with my index fingers for a long time until gaming forced my typing posture to be at least a bit better in that with learning WASD so well my left hand naturally fit itself into form. My right one though is nearly useless because I've never had to force it to lay right on the keyboard and actually typing in proper form now is a slow and arduous nightmare of spelling mistakes and misstypes. At the very least with knowing all the buttons on my keyboard I'm starting to pick it up a bit faster than I would've as a kid not knowing my keyboard inside-out.

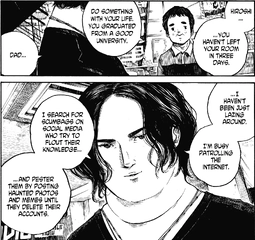

Ideal programming discussion site

So I was going through some of my notes and I found one where I put down some thoughts and ideas about a site specifically for discussions about programming. I'm sort of biased towards the imageboard format of discussion, so in my head the ideal site would work sort of like an imageboard, except with no images (posts are just formatted text with code blocks and stuff and can also have images embedded in the text).

Also I really like the anonymity of imageboards, but it sucks constantly getting spam and stuff, so I think the ideal system is something like this:

>1. a system where you need to create an account (you still show up as anon when you post) and you get special privileges, like being able to post if your account is over 2 days old, being able to create threads if you've made over 10 posts without a warning, etc.

>2. allowing OP to prune posts in his thread, this way he can moderate it and stop off-topic flame wars from constantly bumping the thread

(copied from my notes)
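To make point 1 a bit more concrete, here's a rough Python sketch of how those privilege checks could look. The names (`Account`, `can_post`, `can_create_thread`) are made up for illustration, and I'm assuming "without a warning" means the account has zero warnings on record. The thresholds are the ones from the notes.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

MIN_ACCOUNT_AGE = timedelta(days=2)  # from the notes: account over 2 days old
MIN_POSTS_FOR_THREADS = 10           # from the notes: over 10 posts without a warning


@dataclass
class Account:
    """Hypothetical account record; posts would still display as anon."""
    created_at: datetime
    post_count: int = 0
    warnings: int = 0


def can_post(account: Account, now: datetime) -> bool:
    """Posting unlocks once the account is more than 2 days old."""
    return now - account.created_at > MIN_ACCOUNT_AGE


def can_create_thread(account: Account, now: datetime) -> bool:
    """Threads unlock after more than 10 posts and no warnings (assumed reading)."""
    return (
        can_post(account, now)
        and account.post_count > MIN_POSTS_FOR_THREADS
        and account.warnings == 0
    )
```

Keeping the thresholds as constants would make it easy to tune them if spam turns out to be worse (or better) than expected.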

I have some more ideas but anyway what about you? I think there's a lot to talk about when it comes to something like this

I was looking at wapchan today to see how the popularity's been treating figamin, and I just noticed the adorable and lovely to look at new homepage. It's got the forum look down to a T, but probably even better because of all the little additions and whatnot to make it look more professional than the normal slapped-together forums are. The random quote at the top being typed out is a treat to stare at. Really, it makes me think we need to get a redesign of the kissu homepage to make it look more presentable as well. Think it really adds to the credibility of a site to have it looking as good as possible.

Nvidia x Intel Partnership

>NVIDIA (NASDAQ: NVDA) and Intel Corporation (NASDAQ: INTC) today announced a collaboration to jointly develop multiple generations of custom data center and PC products that accelerate applications and workloads across hyperscale, enterprise and consumer markets.

>The companies will focus on seamlessly connecting NVIDIA and Intel architectures using NVIDIA NVLink — integrating the strengths of NVIDIA’s AI and accelerated computing with Intel’s leading CPU technologies and x86 ecosystem to deliver cutting-edge solutions for customers.

>For data centers, Intel will build NVIDIA-custom x86 CPUs that NVIDIA will integrate into its AI infrastructure platforms and offer to the market.

>For personal computing, Intel will build and offer to the market x86 system-on-chips (SOCs) that integrate NVIDIA RTX GPU chiplets. These new x86 RTX SOCs will power a wide range of PCs that demand integration of world-class CPUs and GPUs.

>NVIDIA will invest $5 billion in Intel’s common stock at a purchase price of $23.28 per share. The investment is subject to customary closing conditions, including required regulatory approvals.

https://nvidianews.nvidia
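For a sense of scale, the two figures in the quote imply a share count you can work out directly. This is just back-of-the-envelope arithmetic in Python on the quoted numbers; it assumes whole shares only and ignores fees or any other deal terms, which the quote doesn't cover.

```python
from decimal import Decimal

investment = Decimal("5000000000")  # $5 billion, from the quoted announcement
price_per_share = Decimal("23.28")  # purchase price, from the quoted announcement

# Floor division gives the number of whole shares the investment covers.
shares = int(investment // price_per_share)
print(f"{shares:,} shares")  # prints "214,776,632 shares"
```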

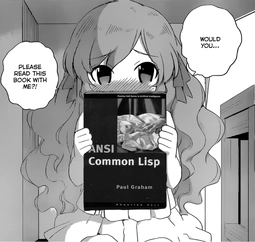

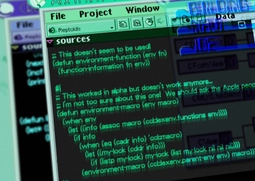

Lisp and λs

Last several months I've been going down the lisp rabbit hole again. I really really love this family of programming languages and I'm sad things like C and Javascript became the widely used standards instead. Lisp is really fun but in this modern world of everything being in a container and nothing being trusted by default I doubt it'll ever become mainstream again. But I can dream... I really wish we were all using lisp machines instead of unix. The main issue I've found with using lisp for day-to-day work is the 1 million different dialects and the fact that they don't always mesh well together. For example:

GNU Shepherd is probably the best init I've used on the unix-like OSs. It's written in Scheme and I really like it. Pairs well with the best package manager I've used on unix (Guix). Thankfully, they were designed to work together. Shepherd just reached version 1.0 finally and Guix is becoming pretty stable as well even on non-free systems. But GNU hosts both projects and they've been under constant ddos attacks since last year. So some days it's impossible to pull down fresh packages or substitutes (their term for pre-compiled binaries). I ran Guix as my primary OS for several months and I really liked it. Much better than the Nix eco-system. But I eventually got tired of having to compile so much locally due to the constant ddos attacks (kernel+browser updates every few days became painful).

Then there is Stumpwm. Which is a tiling WM written in another lisp dialect. It's in common lisp. I really like it as well and it's probably the best WM I've used. But it still suffers from multi-monitor support not being 100% yet. Configuration through common lisp and being able to re-configure in real time without restarting/recompiling is great. I eventually ended up going back to dwm for now because it does what I need and hardly changes at all. I needed the multi-monitor support even on my laptop.

Emacs is of course the best text editor and it's been around for a long time. I do a lot in it beyond editing text. It's the best front-end for git that I've found (magit). But it uses yet another lisp dialect (elisp) and suffers from its own long standing problems like no true multi-threading. In practice it isn't that big of a deal and ctrl+g gets me out of any hang most of the time. But from time to time it'll hard lock and I have to manually kill it.

As an aside for a few months before I switched to stumpwm I was using EXWM. Which meant emacs was my entire window manager. I really really liked this and I wish I could have stuck with it. But it doesn't work that great for the couple of mouse/input device driven applications I use and the lack of multi-threading support was sometimes painful. If one application in the background froze so did my entire desktop until it finished doing whatever it was doing to block it.

I just wish the lisp world wasn't so segmented and all of the above used a common dialect. The idea of being able to modify anything running in software on my machine in real time is very appealing. But I don't want to remember 4+ dialects to do it. Ironically, modern GUIs are basically the same thing the lisp machines were. Except they're centered around a javascript interpreter instead of a lisp interpreter.

The lisp machines were tossed aside back in the day due to bad performance compared to C stuff like Unix and the fact that a bunch of different companies tried to cash in on them instead of working together. When I got started learning programming lisp was considered pretty much dead outside of emacs and people said it wasn't worth using. So it's kind of nice seeing it make a bit of a comeback lately. I just wish it didn't happen so late.

Anyone else here a lisp addict?

I'm feeling really, really tempted to get a 5090 even though it's a massive scam and it's months worth of savings. It's not the rational thing to do, but I do AI stuff a lot and it brings me joy. (no I don't condone AI shitting up the internet and art)

There's also 3D modeling I want to take more seriously after Palworld reignited my passion for building stuff. Obviously you don't actually need a top of the line card to do this stuff, but it does allow more geometry to be active and speeds up rendering massively. More VRAM means you can have more processes open so jumping between programs is smoother.

AAA gaming sucks apart from Capcom so that doesn't really enter the equation at all. I guess ironically retro pixel filters are known to be VERY demanding if I decide to do that. Might be more demanding on CPU, though, I can't remember.

I'm in that CG tracker that went private 5 years ago so software and assets are no issue, but man this is still such a huge amount of money.

What to do....................

Let's talk to AI Characters

MORE AI STUFF! It's weird how this is all happening at once. Singularity is near? Alright, there's another AI thing people are talking about, but this time it shouldn't be very controversial:

https://beta.character

Using a temporary email service (just google 'temporary email') you can make an account and start having conversations with bots. (Write down the email though because it's your login info)

But, these bots are actually good. EXTREMELY good. Like, "is this really a bot?" good. I talked with a vtuber and had an argument and it went very well. Too well, almost. I don't know how varied the stuff is, but the bots were really entertaining when I talked to Mario and a vtuber.

Sadly, it's gaining in popularity rapidly so the service is getting slower and it might even crash on you.

It says "beta" all over the site, presumably this is in the public testing phase and once it leaves beta it's going to cost money, so it's best to have fun with this now while we still can (and before it gets neutered to look good for investors or advertisers).

I spent a really long time trying to get this working recently, so I figured I'd document what I did to get GPU passthrough working on my laptop. The steps I went through might be a bit different on other distros given that I am using Proxmox, but the broad strokes should apply. Bear in mind, this is with regards to using a Windows 11 virtual machine. Certain steps may be different or unnecessary for Linux-based virtual machines.

First, why might you want to do this? Well, the most obvious reason is that virtual machines are slooow. So, by passing through a GPU you can improve its speed considerably. Another possibility would be that you want to use the GPU for some task like GPU transcoding for Plex, or to simply use it as a render host, or you may want to use it for something like AI workloads that rely on the GPU. Alternatively, you may just want to use this to have a virtual machine that you can host Steam on or something like that (bear in mind, some games and applications will not run under virtual machines or run if you are using Remote Desktop).

0. Enable Virtualization-specifi

1. Create a virtual machine

- BIOS should be OVMF (UEFI)

- Machine type should be q35

- SCSI Controller should be VirtIO SCSI or SCSI Single; others may work, these are just what I have tested

- Display should be VirtIO-GPU (virtio); other display emulators will not work for Proxmox's built-in console VNC, or otherwise cause the VM to crash on launch.

- CPU may need to be of type host and hidden from the VM

2. Edit GRUB config line beginning with "GRUB_CMDLINE_LINUX_DE

- These settings worked for me: "quiet intel_iommu=on iommu=pt pcie_acs_override=down

- For AMD CPUs, change 'intel_iommu' to 'amd_iommu'

- Save the changes and then run 'update-grub'

- Reboot

3. Run 'dmesg | grep -e DMAR -e IOMMU'

- You should see a line like "DMAR: IOMMU enabled"
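As a complement to the dmesg check, you can also confirm that the flags from step 2 actually made it onto the running kernel's command line. Here's a small Python sketch; the function name `missing_iommu_flags` is my own, and it only looks for the two IOMMU flags mentioned above (`<vendor>_iommu=on` and `iommu=pt`), nothing else.

```python
from typing import List


def missing_iommu_flags(cmdline: str, vendor: str = "intel") -> List[str]:
    """Return the IOMMU flags from step 2 that are absent from a kernel command line.

    `vendor` is "intel" or "amd", matching the intel_iommu / amd_iommu flag names.
    """
    wanted = [f"{vendor}_iommu=on", "iommu=pt"]
    present = set(cmdline.split())
    return [flag for flag in wanted if flag not in present]


def read_kernel_cmdline(path: str = "/proc/cmdline") -> str:
    """Read the parameters the running kernel was booted with (Linux only)."""
    with open(path) as f:
        return f.read()
```

On the host after the reboot, `missing_iommu_flags(read_kernel_cmdline())` should come back as an empty list if GRUB picked up the change.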

4. Add the following to /etc/modules :

vfio

vfio_iommu_type1

vfio_pci

vfio_virqfd

5. Run "dmesg | grep 'remapping'"

- You should see something like the following:

"AMD-Vi: Inter

"DMAR-IR: Enab

5.1 If not, run "echo "options vfio_iommu_type1 allow_unsafe_interrupt

6. Run "dmesg | grep iommu"

- You need proper IOMMU groups for the PCI device you want to assign to your VM. This means that the GPU isn't arbitrarily grouped with some other PCI devices but has a group of its own. In my case, this returns something like this:

(dmesg output truncated)

6.1 If you don't have dedicated IOMMU groups, you can add "pcie_acs_override=dow
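If reading groups out of dmesg is a pain, the same information is exposed under /sys/kernel/iommu_groups on Linux, with one numbered directory per group and a `devices` subdirectory inside each. Below is a rough Python sketch that reads it; the function names are my own, and this is just one way to inspect the groups, not something the steps above depend on.

```python
from pathlib import Path
from typing import Dict, List


def iommu_groups(root: str = "/sys/kernel/iommu_groups") -> Dict[int, List[str]]:
    """Map each IOMMU group number to the PCI addresses of the devices in it."""
    groups: Dict[int, List[str]] = {}
    base = Path(root)
    if not base.is_dir():
        return groups  # IOMMU not enabled, or not a Linux host
    for group_dir in base.iterdir():
        if not group_dir.name.isdigit():
            continue
        devices_dir = group_dir / "devices"
        if devices_dir.is_dir():
            groups[int(group_dir.name)] = sorted(p.name for p in devices_dir.iterdir())
    return groups


def group_mates(groups: Dict[int, List[str]], address: str) -> List[str]:
    """Return the other devices sharing a group with `address`."""
    for devices in groups.values():
        if address in devices:
            return [d for d in devices if d != address]
    raise KeyError(f"{address} not found in any IOMMU group")
```

For example, `group_mates(iommu_groups(), "0000:01:00.0")` lists whatever else sits in the GPU's group. The GPU's own audio function (01:00.1) showing up there is expected; unrelated devices are the ones to worry about.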

7. Run lspci to determine the location of your GPU or other PCI device you want to pass through. It should generally be "01:00.0"

8. Run "lspci -nnk -s 01:00"

- You should see something like this:

01:00.0 3D

01:00.1 Audio

- The first 4 characters designate the Vendor ID, in this case "10de" represents Nvidia. The second 4 characters after the colon represent the Device ID, in this case "1bb7" represents an Nvidia Quadro P4000
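If you'd rather not pick the IDs out by eye, the bracketed `[vendor:device]` pair can be pulled out with a regular expression. A minimal Python sketch follows; the sample line is made up for illustration (only the `10de:1bb7` pair comes from this guide), so expect your own lspci output to read differently.

```python
import re
from typing import Optional, Tuple

# Matches the "[vendor:device]" pair that `lspci -nn` prints, e.g. "[10de:1bb7]".
# The 4-digit class code like "[0302]" has no colon, so it is not matched.
ID_PAIR = re.compile(r"\[([0-9a-fA-F]{4}):([0-9a-fA-F]{4})\]")


def vendor_device_ids(lspci_line: str) -> Optional[Tuple[str, str]]:
    """Return (vendor_id, device_id) from one line of `lspci -nn` output, or None."""
    match = ID_PAIR.search(lspci_line)
    if match is None:
        return None
    return match.group(1).lower(), match.group(2).lower()


# Illustrative line only; the real output on your machine will differ.
sample = "01:00.0 3D controller [0302]: NVIDIA Corporation Device [10de:1bb7] (rev a1)"
print(vendor_device_ids(sample))  # prints ('10de', '1bb7')
```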

9. (Proxmox-specific, but generally applies) Add a PCI Device under Hardware for your virtual machine

- Select the ID for your Device, enabling "All Functions", "Primary GPU", "ROM-Bar", and "PCI-Express"

- Fill in the Vendor ID, Device ID, Sub-Vendor ID, and Sub-Device ID. In my case, the Vendor ID and Device ID are: "0x10de" and "0x1bb7" and the Sub-Vendor ID and Sub-Device ID are: "17aa" and "224c"

- If you edit the virtual machine config file located at "/etc/pve/qemu-server/vmid.conf" (replace vmid.conf with your Virtual Machine ID, like 101.conf), that would look like hostpci0: 0000:01:

10. Run the following, making sure to replace the IDs with the IDs for your specific GPU or PCI device.

echo "options

11. Disable GPU drivers so that the host machine does not try to use the GPU by running the following:

echo "blacklis

echo "blacklis

echo "blacklis

echo "blacklis

12. (Nvidia-specific) Run the following to prevent applications from crashing the virtual machine:

echo "options

12.1 You may want to add "report_ignored_msrs=0

12.2 Kepler K80 GPUs require the following in the vmid.conf:

args: -machine

13. Run the following:

echo "softdep

echo "softdep

echo "softdep

14. [Skip this step unless you have errors beyond this point] Note: At this point, you may read that you might require dumping your GPU's vBIOS. In my experience, this was completely unnecessary and above all did not work. Specific instructions in other guides may be like the following:

cd /sys/bus/pci/devices/0000:01:00.0/

echo 1 >

cat rom >

echo 0 >

In my experience, attempting to run "cat rom > /usr/share/kvm/vbios.bin" would result in an Input/Output error and the vBIOS would not be able to be dumped. If you really do end up needing to dump the vBIOS, I would strongly recommend installing Windows onto your host machine and then installing and running GPU-z. GPU-z has a "share" button that allows you to easily dump the vBIOS for your GPU.

To add the vBIOS to your virtual machine, place the vBIOS file that you dumped at "/usr/share/kvm/" and then add ",romfile=vbios.bin" to your vmid.conf for your PCI device (replacing vbios.bin with the name of the vBIOS file you dumped). That would look something like the following:

hostpci0: 0000:01:

15. Reboot. At this point, when you start your virtual machine you should be able to see in Windows Device Manager that your GPU was detected under display adapters. At this point, try installing your GPU device drivers and then reboot your virtual machine once they've installed. If all goes well, you should have a functioning GPU passed through to your virtual machine. If not... You'll likely see "Code 43" under the properties for your GPU in Device Manager.

16. Going back to your vmid.conf add the following to your cpu options ",hidden=1,flags=+pcid

cpu: host,hidden

17. Nvidia drivers can be very picky. You may need to add an ACPI table to emulate having a battery. You can do this by downloading this and then adding it to your vmid.conf by adding a line like so:

args: -acpitable

18. If you're still having a code 43 issue, you can go back to step 14 and try adding your vBIOS.

At this point, you're done. Your virtual machine should be successfully detecting your GPU or PCI device and you should be able to use it mostly normally. For obvious reasons, you may still not be able to run all programs as you would like due to running them under a virtual machine, however, the main core functionality of the GPU or PCI device should be fully accessible to the virtual machine.

A few of my resources:

https://pve.proxmox.co

https://gist.github.co

https://lantian.pub/en/article/modify-computer/laptop-intel-nvidia-op

https://forum.proxmox.

Best Privacy Tools 2025

So here is a list I created of basic digital privacy tools to consider using in the current landscape.

Web Browsers:

Firefox: A trusted, open-source browser known for its commitment to privacy.

LibreWolf: A privacy-focused, Mozilla-based browser with enhanced security features.

Brave: A privacy-first browser that blocks ads and trackers by default.

Private Search Engines:

MyAllSearch: A UK-based search engine offering privacy with no cookies or tracking.

DuckDuckGo: A widely-used, US-based search engine that prioritizes anonymity.

SwissCows: A privacy-driven search engine leveraging secure Swiss infrastructure.

Qwant: A French-based metasearch engine with a focus on privacy and safe browsing.

MetaGer: A German-based, open-source metasearch engine offering privacy and a variety of helpful tools.

Password Managers:

Bitwarden: An open-source, secure password manager with both free and premium options.

1Password: A robust password manager with top-tier security and cross-platform compatibility.

Dashlane: A premium password manager featuring a wealth of privacy-focused tools.

Note: While LastPass is a popular choice, it has experienced multiple security breaches in recent years.

VPN (Virtual Private Network):

NordVPN: A reliable VPN service offering strong encryption and a large server network.

Surfshark: A budget-friendly VPN with a solid privacy policy and a wide array of features.

Mullvad: A privacy-centric VPN that has passed no-logs audits, ensuring your anonymity.

ProtonVPN: A secure VPN provider from Switzerland with a strict no-logs policy.

ExpressVPN: A leading VPN service that has undergone multiple no-logs audits and security assessments.

Secure Email Services:

StartMail: A secure email provider offering burner aliases and end-to-end encryption.

ProtonMail: A Swiss-based email service renowned for its zero-access encryption.

Mailfence: A customizable, secure email provider with full encryption and privacy features.

Help archive important stuff from Discord thread

Since Discord ruined being able to freely download:

- Mods

- Misc. Software

- Emulators/ROM packs

- Combo videos/tutorials

- various other content that used to be available freely on the web/forums/imageboards

I thought it might be a good idea to start attempting to archive this stuff elsewhere. Since it can be taken down at any time (and regularly is) by DMCAs against Discord. Furthermore, even if it stays on Discord for awhile it's impossible for people like me to access it. Since for some dumb reason every random 'server' for stuff like fighting game combo videos/strategy guides for characters for various fighting games are locked behind channels. The mods ALWAYS refuse to grant access to such places too if you request nicely. Since "LOL if you have no cell number you must be a troll".

I'll start. Here are two things that are hard to find outside of discord now. I was luckily able to grab them from a burner account because the mods decided to unlock access this week and will probably close it again tomorrow. Since no fun allowed.

- BlazBlue:CF (for PC) D-code reset: https://files.catbox.m

The above will allow you to reset your profile on the BB:CF servers when it eventually b0rks itself. As it will do if you play long enough. The community had to make this because the only other way is to request to have your save file reset on the steam forums. But ArcSys fired the guy that used to do it so he hasn't been around in over two years now. Leaving tons of people unable to play the game online despite it working perfectly fine. I have offered to fix the glitch for them for years but they refuse to let anyone do it. Furthermore, when I tried to apply for that other guy's job they never responded to emails.

- The BlazBlue:CF improvement mod(s): https://files.catbox.m

This will give you various extra options in online play (opens many new modes) and improves the frame rate/graphics/unlocks match making outside of your own region.

Again, because the entire "community" moved to discord from the forums (and the mods are horrible) this is locked away inside of a discord chat now. The github links you'll find via search engines are horribly outdated now and do not work with recent versions.

There are two mods included. One is the main one and the other is some guy's minor modifications to that one. See the READMEs and .zip archives inside of the 7z for more information.

¥ Other stuff:

I was going to include a bunch of other things related to BlazBlue:CF here. But they're all locked behind private channels now and they refuse to let me join them no matter how nicely I ask.

If anyone is willing to go through the individual character so-called servers and verify with phone number+sucking mod cock I'd really appreciate it. As all combo videos, tutorials, strategy guides, match-ups etc. are locked away within those now. Instead of using the wiki and forums we so kindly set-up and funded for years these new players started discords. Now the wikis and forums have been left to rot. Aside from some guy that squats on pages for certain characters to correct their pronouns and start arguments in talk pages.

If you have any useful software for other games or just misc. software in general locked up behind discord please post it. Along with those early release emulator updates they refuse to share these days because they're begging for "donations" and other such nonsense.

If we get enough good stuff posted here I'll start a MEGA archive and XDCC post to ensure they aren't lost forever when these "servers" eventually get DMCA'd.

Discord was the worst thing that ever happened to software development and gaming I swear. Voice chat in games is a barren wasteland now. I get kicked out of L4D2 games all of the time for refusing to use a discord server to chat to pubs and a TON of software is beyond my reach thanks to them refusing to post it anywhere else.

Guides for other things welcomed too of course. So much is locked away on discord now and it'll soon be lost forever if we don't do something about it and start archiving it.

I bought a wireless trackball because it was cheaper and the wired version of the trackball wasn't in stock when I needed a new pointing device. It flashes a red LED light whenever the batteries are running low.

Two or three weeks ago the batteries I put in it months ago started to run out of juice and the light came on. I pulled both of them out and switched the order they were in by putting the top battery in the bottom slot and the bottom battery in the top slot. It is now weeks later and there is no indication that they're running out of juice.

Why is technology like this? I wonder if this trackball has been only draining one battery this entire time and if I've been throwing good batteries away all this time.

I used to have a wireless Microsoft mouse back in the day. It was my first mouse with a laser instead of a ball. That thing would chew through double As quickly. It was horrible for playing FPS games as well.

What pointing device does kissu use? I need a new mouse for playing video games. The Razer one someone gifted me years ago shit the bed and can't properly track anymore. Even when it worked it was never good. It would randomly stop working mid-game and I'd have to run around like an idiot and wave it around until it woke up. It also installed a bunch of background applications for some reason, which were spyware. I want to buy a good mouse that will last for years to pair with my trackball but everything on the market looks like it's junk. All of them have bad reviews and everyone says the switches in them fail after only about a year of use.

PC Game Controllers

What kind of controller(s) do the kissu gamers use on PC? I'm thinking I need to replace my Switch Pro controller because it's been very annoying lately. It's not recognized natively by Windows (relies on Steam emulation) so there's some stuff you can't play with it if you can't get the game to launch from Steam and have the controller recognized, which is happening more often lately. A shame, because I find it very comfortable.

What controller do you use and are you happy with it? Also, man, these things are pretty expensive. I miss the days of $10 controllers.

Anyone else been messing around with the stable diffusion algorithm or anything in a similar vein?

It's a bit hard to make it do exactly what you want but if you're extremely descriptive in the prompt or just use a couple words it gives some pretty good results. It seems to struggle a lot with appendages but faces come out surprisingly well most of the time.

Aside from having a 3070 I just followed this guide I found on /g/ https://rentry.org/voldy to get things set up and it was pretty painless.

How human is kissu?

Here's a fun little test: go to https://gowinston.ai, put one of your longer posts into it, and then see how distinguishable you are from an AI. I got a perfect score because I'm a superhuman so you should as well. If you don't have a throwaway email you can put into it then go and make one right now because why do you not have one and don't you DARE get a higher readability score than me.

Deepseek

There's been a lot of chatter lately about Deepseek. In the online circles I'm in, people have a politics-colored understanding, more or less saying "American tech companies couldn't do this, but an opensource Chinese company could and American tech companies are in 'damage control'". Which... I really don't understand. If it's an open source model, like Llama was, for example, I don't see how this doesn't just cause there to be a proliferation of much more efficient and performant models -- the same way after Llama became available, suddenly there was Phi from Microsoft, Gemma from Google, Mistral, and others.

What does /maho/ think?

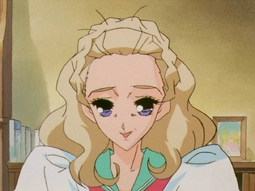

What do you look for when downloading an anime release? This seems to be a surprisingly divisive topic in some circles, with some wanting only the highest quality encodes regardless of size while others just want mini-encodes that save space while looking somewhat decent, like ASW or EMBER. I think there's a certain appeal to aiming to only get the highest quality, but at the same time I sometimes struggle to see the difference between that & a significantly smaller version. Maybe my eyes are bad, or it's because I'm just watching on a 1080p monitor?

Personally, I usually reserve those massive encodes for series I really enjoy and download "average" ones for anything else. I only have so much space after all, and I enjoy having a sizeable anime collection...

For example with pic related, I store the MATSU release rather than the Kagura release, which is supposed to be better but also around 13GB or so larger.

Since switching from IT to Dev Manager, I’ve realized the insane amount of bureaucratic processes we have to follow (this is just related to dev performance, I have hundreds of other processes I have to follow daily).

- Monthly self-eval: Devs must submit a detailed monthly self-evaluation

- Manager evaluation: Dev’s manager evaluates them monthly too.

- Weekly team evaluation: Dev's direct manager evaluates the "team" as a single unit every week.

- Quarterly upper management review: The direct manager’s manager steps in every quarter to assess the team as well.

- Each dev's “buddy” (more senior dev they're partnered with) performs a monthly evaluation of their performance too

- TL assesses sprint quality after each sprint.

- TL's manager reviews sprint quality too (non-technical manager)

Now, management expects me to sift through all this data weekly, together with Jira, to identify any "underperformers". They don’t see the redundancy; they just want more.

When I pointed out that the sheer volume of surveys and performance checks is beyond redundant, my manager brushed it off, accusing me of trying to "work less."

In his eyes, productivity means creating more processes. Only colleagues who add extra layers of bureaucracy get rewarded, never those who streamline.

Am I being a baby?

Feeling disillusioned... Is this really what management is supposed to be?

Because if so, I’m tempted to just go wild creating even more pointless processes—like they seem to want—and drown everyone in surveys and meaningless reports.

Org-mode social networking

New social network powered by org-mode (emacs) just came out. https://github.com/tanrax/org-social

>Org-social is a decentralized social network that leverages the simplicity and power of Org Mode files.

>It allows users to create, share, and interact with posts in a human-readable format while maintaining compatibility with various text editors and tools. You can publish posts, make replies, mention other users, create polls or personalize your profile. All this without registration, without databases... Just you and your Org Mode file.

>It is heavily inspired by twtxt, Texudus, and the extensions developed by the Yarn community.

>It takes the best of these specifications, eliminates complex parts, leverages Org Mode's native features, and keeps the premise that social networking should be simple, accessible to both humans and machines, and manageable with standard text editing tools.

Looks pretty interesting. Going to start playing with it tonight. You don't need emacs since a lot of other text editors support org-mode features now but you're probably best off using emacs if you aren't into org-mode yet.

I'm not much on twitter-like social networking (microblogging) but this seems like it would solve most of the common issues with such places. Like censorship and ddos attacks. Certainly it's much better than the federation attempts that have resulted in different instances de-linking from each other over petty disagreements.

This still needs some glue to patch everyone's "feeds" together. I'm sure that will follow soon. Simple RSS feeds would be enough to follow and reply to other people.

Thought about posting this in the lisp thread I made a few days ago but I kind of ranted and didn't want this to get lost in it.

If you're not already using org-mode I really suggest checking it out because it's useful for a lot of things. I've been using it for years to export my writings to multiple different file formats and even HTML. Saves me tons of time and trouble. Setting up emacs+org-mode only takes about an hour and since emacs has such a good help system+docs it's easy to figure out how to get around it without referring to the internet all of the time.

If you aren't aware org documents are just simple text files with minimal mark-up that can be exported to any other file type you can think of including stuff like LaTeX.

AHAHAHAHAHA I'VE FINALLY DONE IT

AFTER A WEEK OF WRESTLING WITH THE ARCH WIKI AND FIGURING THINGS OUT I'VE FINALLY GOTTEN RID OF MY NEED TO DUAL BOOT INTO WINDOWS FOR activities that shall not be named AND I CAN STAY ON LINUX 24/7!!!

TAKE THAT MICROSHIT, YOUR WANGBLOWS JUST GOT BLOWN OFF MY DRIVE LIKE THE SPECK OF SHIT IT IS! TRY MAKING ME UPGRADE TO YOUR LATEST VERSION OF WINDOWS NOW, FAGGOT! I'M EVEN RUNNING AN UNAUTHORIZED COPY OF WINDOWS IN A VM AND PERSONALIZING IT WITHOUT YOUR PERMISSION WITH THE REGEDIT, HOW DO YOU LIKE THAT? HUH? I BET IT DOESN'T FEEL GOOD DOES IT TO HAVE YOUR INTERNALS TAMPERED WITH AGAINST YOUR WISHES BUT TOO BAD I'M IN CONTROL NOW!

FREEDOM HAS NEVER FELT SO GOOD, ESPECIALLY AFTER HAVING BEEN SUBJECTED TO WINDOWS 11 DICKS UP MY ASS

Now how do I color correct my monitor...

Video Editing questions

I was thinking it'd probably be a good idea to have a thread for asking questions about video editing, since videos are generally one of the tougher things to make. My own experience is limited to making a bit of funposts here and there of varying degrees of competency so I'm not really an expert myself, but I could help with stuff I know. For other things, I think I've seen people posting video edits before so hopefully they'd be more knowledgeable.

I've been writing a largely custom game engine in C, C99 specifically because it's the best supported version of the language that isn't completely archaic. I'm using SDL, mostly because I want the thing to run in environments that aren't just the older version of Windows that I'm on. In doing so, I've realized two things:

First, I kind of wish I'd used C++. I still think C is the better overall language, and I don't exactly regret using it, but there have been a lot of situations where C++ features would've removed a lot of busywork on my end. For example, I have multiple circularly-linked lists that hold different structs but are otherwise identical in terms of access and modification, and they'd be a perfect candidate for templates. Right now, I'm handling them with a combination of boilerplate and weird post-hoc macro hacks that I put in to reduce said boilerplate when it became too much for me to manage.

Second, I've come to realize just how much of a game engine is just bookkeeping: loading data, saving data, keeping logic running at consistent speed, normalizing coordinates so the scene can be rendered, maintaining the data structures necessary for things like rendering and audio playback, etc. The problem with most of these things is that it's hard to know if they're working individually, because you need to have several of the other features up-and-running first before you get any direct feedback; I need to treat them all as one big thing, at least in the short-term, and that's a style of programming that I am not at all used to.

There is no point to this thread, by the way, I just wanted to share.

Apparently Gigabyte's Intel boards have a UEFI exploit that can install malware in the mobo firmware

>GIGABYTE claims only the following Intel-based motherboards are affected: H110, Z170, H170, B150, Q170, Z270, H270, B250, Q270, Z370, B365, Z390, H310, B360, Q370, C246, Z490, H470, H410, W480, Z590, B560, H510, and Q570. No AMD chipset-based motherboard is affected at the time of writing, probably making all AMD boards immune from this vulnerability.

Practical usage of AI

Right now AI is a mess of large language models each trying to out-everything the other as the ramp-up in operation costs seems infinite while the profits seem to remain at a net-loss even if revenue is increasing. Is there actually a viable future for any of these companies or will the hype around AI die off as the utopian promises remain unfulfilled and leave a burning pile of expensive rubble where all the data centers used to be?

The only things I've seen some actual promise in have been the more narrowly focused LLMs trained on specific tasks like speeding up diagnosis and assistance in the health field. These, along with other AI 'marvels', still seem to require trained human correction/review, but maybe at a smaller scale. Otherwise generative AI on its own doesn't seem to produce much beyond merely acceptable output.

I guess the idea for companies right now is to take the usual cheap approach of bleeding money for a while until they can assert themselves in some way as a critical part of a workflow/lifestyle and then massively up the cost when people are too stuck with them to simply drop them. Otherwise they need to find some way to reduce costs by a lot.

My boss wants to run a locally hosted LLM model on an R760 and insert an A100 into it to do analysis on proprietary data and reasoning.

He's saying that Grok can be run locally if you buy a license (because he read it in Brave search's AI prompt fields).

I think this is true, but I'm also not sure because ChatGPT says it lacks various RAG integrations, while Grok itself says it doesn't.

Also am not fond of xAI but I dunno any alternatives since Anthropic and OpenAI want everything running through their system.

How do I tell what is and isn’t a good top-of-the-line motherboard?? It seems flipping impossible to find one that’s good and still has a good number of SATA ports (~8). I just want one that works with AMD and DDR5, yet it seems like I’m being forced into using ASUS and their garbage malware.

Have you undervolted your GPU or CPU? It's something I read about a few years ago, but dismissed because I assumed it would reduce performance.

Well, with my 3080 I went from 350 watts when running Stable Diffusion to 270ish. The temperature is down 9 degrees Celsius, too. The trade-off is that I went from 40 seconds per generation of 4 images to... 41 seconds. I could tinker it to make it 300 watts and keep the 40 second time, but that doesn't seem worth it.

Seems like a great thing to do to make things run a little bit cooler and quieter while saving a tiny amount of money.
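The numbers above can be turned into energy per batch as a rough check. This is only a back-of-the-envelope sketch: it assumes the card draws the quoted wattage for the whole generation, takes "270ish" as exactly 270 W, and the function name `energy_joules` is made up for illustration.

```python
def energy_joules(watts, seconds):
    """Energy for one batch: power (W) times time (s) gives joules."""
    return watts * seconds


# Figures quoted in the post: one batch of 4 images on a 3080.
stock = energy_joules(350, 40)        # stock settings
undervolted = energy_joules(270, 41)  # undervolted, "270ish" taken as 270
saving = 1 - undervolted / stock

print(f"{stock} J -> {undervolted} J per batch ({saving:.1%} less energy)")
# prints: 14000 J -> 11070 J per batch (20.9% less energy)
```

So under those assumptions the extra second costs very little compared to the roughly one fifth less energy used per batch.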

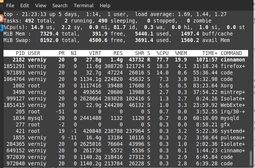

The usual 'post your desktop' thread

Reminder not to dox yourself!

I wanted to show off my current working environment for my laptop. Which is mostly for writing so it's free of many of the usual distractions on my six monitor multi-monitor workstation set-up. The DE: LXQt is a lie by the way. The last release of firefox broke its GUI unless you fake having a DE installed through your .profile/.xsession.

This is probably my most comfy system at the moment. My own custom version of dwm that has terminal swallowing set-up. Driven mostly through keyboard although touchpad, trackpoint and touchscreen is fully working (but rarely used). Running latest OpenBSD snapshot. Most things compiled locally (kernel, packages, emacs). I primarily live in emacs and have a custom dashboard set-up when it first opens. Emacs running as daemon. A few things like weechat, newsboat, neomutt and firefox running on other tags so they don't distract me when working in emacs. A custom script I wrote to access youtube and pipe content into yt-dlp+mpv for viewing locally so I never have to visit the actual website. Following channels I like via RSS feeds and using my browser cookies so I can get content that requires log-in.

Everything kept really simple. Picom is only set-up for transparency and to prevent screen tearing, no other fancy effects set-up. Hyperthreading disabled so no logical CPU cores being used (more secure). I do not miss it; everything is as fast with it off as it is with it on. No wine/linux support though so no Steam on this machine which is a bonus for what it's intended for.

Will be replacing this set-up very soon with my own BSD-based kernel and user space tools I mostly wrote myself or adapted from other people's work. Arcan+Plan9 inspired. Hope to show it off soon. I have a total terminal emulator+shell replacement that solves many long standing issues. It can even embed videos right in the terminal, supports multiple jobs and has a real time clock. Integrates directly with the new window manager as well. I've posted about it before. Started as my own little Linux distro based on Gentoo and then I ported everything to FreeBSD. Now I've ported everything again to OpenBSD and started mixing and matching things from various BSD kernels. Replaced almost everything in userspace over the last few years on my dev machine.

Although all the usual stuff still works so I haven't lost access to those tools. But I'm replacing them one-by-one to take advantage of my new GUI. It will have full support for the few things I can't get in OpenBSD. Although a lot of the new kernel I'm working on has been imported from OpenBSD because of its amazing features that you can't get anywhere else. For example, my Firefox install can not access any files in my system outside of ~/Downloads and it has very limited access to system resources. If it attempts to do anything it's not supposed to the kernel crashes it on purpose.

I've found a lot of misbehaving software thanks to those features over the years. Tons of stuff I wrote for Linux wouldn't compile and run at all on OpenBSD. So switching has been very helpful since I cleaned up a lot of C and shell scripts so now they're truly portable.

To the point where 303 installed packages feel 'bloated' but a lot of them are required for Linux-only stuff. Like the usual GNU tools and the GCC compiler. Most of the RAM being used is for Firefox in this screenshot. At boot and with the browser closed I barely consume 100MB of RAM.

If you haven't tried OpenBSD you really should! It's probably the best OS I've ever used and I've used them all. The only major downside is lack of support for legacy stuff from other platforms. Hence why I'm building my own kernel+user space. Which will have support for PC-98, DOS, Windows, Linux, OS/2 and other legacy software through VMs, emulation and translation layers. Since the entire point of the OS I'm building is multimedia support.

You can tell a lot about a person by how they've setup their desktop. So show me yours. Don't be ashamed of it. I'll try to come back later and show off some more of mine.

Custom ROMs

I hate to bring this up, but I'm probably not the only one with this dilemma, so here goes:

Phones. They suck. You probably own one and hate it. What's the current strategy to make them suck less?

My 2016-era budget phone running CyanogenMod (last version before the hostile takeover) is on its last legs, and I'm a bit lost.

I hear LineageOS, CalyxOS and GrapheneOS are the current tried-and-tested options. There's also some controversy about microG vs. stripped versions of the proprietary Googleblobs which I'm not quite up to date with.

Do you have any experience with the above? I hear Pixel hardware is good for the price (if you're prepared to pay Google to get rid of Google), OnePlus is good if you're prepared to pay iPhone prices (which I'm not), and Motorola is good if you're on a budget and prepared to put up with their "ask permission to unlock" nonsense. There's also the Fairphone, but no one I know who owns one has ever said anything positive about the hardware despite loving the idea.

I just spent 2 hours attempting to debug why my laptop wouldn't connect to my local AP. It kept auto connecting to my neighbor's even though I had it listed way down the list in my hostname file. I thought I'd screwed up my config or the driver/firmware for my wireless card was messed up somehow since I recently updated both.

Turns out I forgot I turned off the radios on the AP yesterday just to test something.

I also managed to nuke my local database for my anime media server. Started rebuilding it using Shoko yesterday. It's been running for over 25 hours now and it's only 1/4th of the way through scanning my files. Anidb's rate limit is really low and it's taking forever. Going to have to manually set metadata for over 4,000 files too since for some reason they aren't in anidb's file database.

What dumb things did you do on your computer today anon?

I'm considering getting a burner phone to give out for promotions and advertisements and such but I'm not sure what the cheapest option out there is for such a purpose. Obviously cheap flip phones would be my best bet for the physical hardware but when it comes to the service itself everything seems super pricey at $15 a month minimum, with an extra $10 a month tacked on later secretly to fuck you.

Computer security

How do you manage computer security in your devices?

I feel that just common sense isn't enough nowadays, because of several reasons:

- Browsers especially (even if you disable JavaScript, which is often not feasible in many sites), but also email clients, torrent clients... can all be exploited somewhat easily.

- Some games require kernel-level anti-cheats, which have complete access to your computer. Even if you trust the developer, these kernel drivers are often buggy and can be leveraged by malware.

- Legitimate programs or Steam games might receive malicious updates if the developer or their supply chain is compromised.

- If you use third-party dependencies for development, you might also be compromised if any of them (or their recursive dependencies) are malicious, not uncommon in ecosystems like npm.

- If you play doujin games or eroge, you often have to download them from random untrusted sources.

I've concluded that it's not really possible to trust a computer if you use it for activities like these.

I'm thinking about getting a second device only for sensitive stuff, like banking, shopping and managing passwords. It seems a bit of a hassle, but I can't think of any other way.

Any 3D programmer anon know how I can get the right model to look like the one on the left?

Left is how it's previewed in https://www.deviantart

I have the texture and the specular map loaded. Does it look like it's a problem with the lighting, a problem with the specular, or something completely different? Or is it hard to tell just from the screenshots...

What makes creating an imageboard so complex anyways? When I think about it you should have a thing that displays threads and posts, each containing certain characteristics that they're made up of. And then you assign those posts to threads based off of a connection and then sort those posts by their post number within that thread. Given that why in the world do moderation actions take so long on vichan when really it should be an instantaneous action of removing that association or assigning it to a different thread value? I was thinking about this in the car and no matter how I try to work my head around it, this programming stuff makes no sense to me.
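The mental model described above can be written down as a toy sketch. To be clear, this is only that model kept in memory, with hypothetical names (`Post`, `Board`, `move_post`), and not how vichan or any real imageboard is implemented; real software may also have to deal with uploaded files, caches, pages that get regenerated and so on, none of which appears here.

```python
from dataclasses import dataclass


@dataclass
class Post:
    number: int     # board-wide post number
    thread_id: int  # the "connection" tying the post to a thread
    body: str


class Board:
    def __init__(self):
        self.posts = {}  # post number -> Post
        self._next_number = 1

    def add_post(self, thread_id, body):
        post = Post(self._next_number, thread_id, body)
        self.posts[post.number] = post
        self._next_number += 1
        return post

    def thread(self, thread_id):
        """All posts assigned to a thread, sorted by post number."""
        matching = [p for p in self.posts.values() if p.thread_id == thread_id]
        return sorted(matching, key=lambda p: p.number)

    def move_post(self, number, new_thread_id):
        """Moderation action: reassign the post to another thread."""
        self.posts[number].thread_id = new_thread_id

    def delete_post(self, number):
        """Moderation action: remove the post entirely."""
        del self.posts[number]


board = Board()
op = board.add_post(1, "first thread OP")
reply = board.add_post(1, "reply that belongs elsewhere")
other = board.add_post(2, "second thread OP")

board.move_post(reply.number, 2)
print([p.number for p in board.thread(1)])  # [1]
print([p.number for p in board.thread(2)])  # [2, 3]
```

In this toy version moving or deleting a post really is a single cheap operation, so whatever makes it slow in practice has to come from the parts the sketch leaves out.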

Why the flip do they make ph*nes so big now? They're practically the size of mini tablets at this point. My carrier recently shut down the 2G network and basically forced me to buy a new phone even though my old one from 10 years ago still works fine. The new one is heavy, too large and unwieldy to use with one hand, it makes my hand ache after longer periods of use (lying in bed looking at boorus) and it just barely fits in my pocket.

Someone needs to pioneer a study into developer inefficiency as correlated to skill issues of the userbase.

¥ I don't like choice #1 because it's hard to use and I make mistakes

¥ let's do way #2 instead because i think it's easier

¥ I don't like way #2 because it's hard and I make mistakes

¥ let's do way #3 (what he really meant was do way #1 again with another coat of paint)

If people would just learn the quirks of software and stop complaining that their individual methods don't work as well (AKA adapt to reality) then society would be more productive. The Western entitledness is bloat and forces devs to constantly redesign UIs to meet management's demands.

It's wasted economic potential

AI Video Abomination Thread

Give me a pic and I'll do an animation generation thingie with local "WAN Video". You need to include a "natural language" description of what will happen. There is an AI to autotag the general description of the static image.

For instance this was what I wrote for the OP video:

Himari Burg

Strong, smooth animation. Cartoon anime animation. The girl looks around. She blinks her eyes. She lowers the blanket, revealing a hamburger. She holds the hamburger to her mouth and takes a bite. She then covers herself with the blanket and hides her face.

This was the autotag for the image:

AI Tag

Anime-style drawing of a cute, young girl with light pink hair and large, expressive purple eyes. She is wearing a white hooded cloak with a hood, and is sitting on a red couch. The background is a simple, dark brown gradient. The girl's expression is neutral, and she is looking directly at the viewer. The image has a soft, pastel color palette. The style is clean and detailed, with a focus on the character's delicate features and soft shading.

I can do NSFW too since it's a local model, but that should be on the appropriate board. *cough*

I'm trying to figure out the painful installation of this 'sage attention' thing that is supposed to halve generation time, but until then I'm going to limit the size and duration of things. It took me 3 minutes to generate this, which is definitely not right. Not sure how I was able to do 50 second generations a couple days ago...

AI Ethics and Morality

Since it's such a hot button issue that's distracting from happenings, how about a thread for containing all your fights over AI and the acceptability of its usage. Don't really want to say it's a discussion that can't be had at all because it's something I actually feel quite passionately about in a non-shitposting manner.

How to Improve Your Mood and Computer Efficiency with AI

There's a lot of talk about the controversies of AI recently on kissu. People aren't sure whether it's good or bad for humanity. I can't answer that question, but I know how to improve life on an individual level. This is for windows, but linux nerds can probably do something similar.

First, you need some tools:

1. AI image generation. Ideally local, but if you can do stuff that you like online then that will work. If you're an artist it will also work, but due to the size of the results I don't think people would find the motivation to draw this.

2. Image editing program to do some cropping and maybe a little editing of the AI image.

3. A program that can export to the windows icon (.ico) format. I use gimp.

WHY THE FUCK IS EVERYTHING A SMART TV NOW? EVEN IF IT'S NOT FULLY SMART IT'S ALEXA/FIRE POWERED! I DON'T WANT YOUR Kuso OS OF "WE CAN DO SOOOO MUCH AND LAG THE FUCK OUT OF YOUR TV IN THE PROCESS SO YOU NEED TO CLEAR THE CACHE EVERY WEEK BECAUSE WE DID SO MUCH SMART SHIT ON THE SIDE EVEN THOUGH YOU'RE NOT EVEN CONNECTED TO THE INTERNET" IT'S NOT HELPFUL OR USEFUL JUST HAVE A STATIC FUCKING SETTINGS MENU THAT ALLOWS ME TO CUSTOMIZE HOW I PLEASE YOU STUPID FUCKING TV!

This must be why people buy monitors instead. No hassle there and no trying to force their kuso extras on you. I don't know why no TV brand seems to be able to exist selling extremely capable products that don't come with all the extra baggage that only seems to serve for raising prices. Hell I don't think I've ever even seen a TV that offers 240Hz while there's plenty of monitors out there that do.

The Centralized Internet Is Inevitable

https://www.palladiummag

I think this article makes a good point. Many people here miss the "old internet", not realizing that period was destined to disappear from the start, since the internet cannot be anything else: its inherent properties lead to the eventual centralization of control:

> One of the core functions of the internet is to record material of human interest in digital format.

> This information is not made available to us as individuals. Even if it were, it would not be the kind of information we could use. It’s only useful en masse—in other words, only insofar as it makes us legible and visible to centralized institutions.

> The centralizing trend that we have seen over the lifespan of the internet is not a fluke to be corrected as we learn to properly harness the power of this new technology. Rather, the internet cannot be anything but a centralizing force, so long as there are groups that are situated to disproportionately benefit from that which it renders visible.

What ever happened with the teamspeak revamp? I remember hearing people make a bunch of noise about it leading up to its release and theorizing that it could be the discord killer, but it's been a few months now I think and all that talk seems to have faded away. You'd think that with it being a private alternative that groups could run outside the purview of some top level moderation that more of the piracy/privacy and such groups would move over to it instead of relying on discord, but I don't think I've seen any change in how TL groups advertise themselves.

Single Vs Multi(N) Monitors

What are your thoughts on using multiple displays vs having one main one?

Having done the following:

- One widescreen monitor

- Two monitors

- One Widescreen + Laptop screen

- One TV monitor

I find that my ability to focus on tasks and get into the `zen` zone state of programming is much simpler when I only have one primary monitor to focus my attention on and then potentially an auxiliary one to play music or get outside notifications. Only one thing is the object of my focus at a time and this helps me get things done.

Although I haven't done much development on 4chan X lately, bugs still crop up, and eventually I want to get around to making it work with the new Kissu UI. This is a thread where you can discuss problems with or suggestions for 4chan X. I'm thinking about linking this from the bug reporting page if Verniy's okay with it.

Let's say you flip a coin twice and you want it to come up heads twice. You have a 25% chance of winning.

But what if you know extra information: one of the flips will come up tails. Now you have a 0% chance of winning.

But if you had covered your ears and screamed "LA LA LA I can't hear you!", then you would still have a 25% chance to win. So you could "forget" that there will be a tails to increase your chances of winning.
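The two numbers above can be checked by listing all four outcomes and counting. This is only a sketch of the arithmetic; the helper names (`probability`, `two_heads`, `has_tails`) are made up for illustration.

```python
from fractions import Fraction
from itertools import product

# All equally likely outcomes of two fair flips: HH, HT, TH, TT.
outcomes = list(product("HT", repeat=2))

def probability(event, given=lambda o: True):
    """P(event | given), computed by counting outcomes."""
    possible = [o for o in outcomes if given(o)]
    favourable = [o for o in possible if event(o)]
    return Fraction(len(favourable), len(possible))

def two_heads(o):
    return o == ("H", "H")

def has_tails(o):
    return "T" in o

p_plain = probability(two_heads)                   # no extra information
p_given = probability(two_heads, given=has_tails)  # told a tails will occur
```

Here `p_plain` is 1/4 and `p_given` is 0. Note that the code only changes which outcomes it counts; the flips themselves are the same either way.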

What filetypes will last the longest?

Somebody told me that digitization will let us preserve things forever, but I feel like it's the opposite because of how complicated accessing digital data is and how fast technological systems evolve. Old formats get superseded by updated ones and niche platform-specific ones get abandoned when that platform does. We can look at a wall relief from 5000 years ago and work out an approximation of what it was saying just by looking at it, but how would you get any data out of a floppy filled with files for a program that hasn't been updated in 100 years?

Eventually the effort of maintaining compatibility with old things is going to cause data to be effectively lost, but some formats will obviously last longer than others. Personally, I think the humble .txt file will outlast most others because it's simple, yet vitally important to many basic computing tasks, so you can't easily get rid of it and there's not much incentive to improve the format. That said, I could also see a specialized format like .nes, which is used pretty much just to preserve old data, being maintained by enthusiasts while more general-purpose formats get killed off to force people to adopt newer ones. Which ones do you think will pass the test of time and which will be the quickest to die out?

stuff I would like in my image viewer

- way to quickly edit a lot of pictures

- by censoring out parts of a picture

- basic filters and image adjustments

- adjust levels

- red eye removal

- temporarily reflect pictures so they look new

- move pictures to new folder

- without a face

- with only male, furry faces

I'm too scared to open up issues for them though.

In light of the hack, I had a thought in the shower that imageboards should be hashing information such as IP addresses, emails and so on.

But instead of a one-way hash where the information is never retrievable, it's a two-way "hash" (that is, reversible encryption) where the key is not held on the server. Instead, people who need to see the information would be given a userscript containing the key, which would decrypt information on a need-to-know basis.

That means that a hack would have to break through two levels of security to obtain any useful information.

My biggest concern is performance. But maybe WASM can get around that or mods would use a dedicated desktop app with more processing power available.

CSS Programmers Thread

If you've gotta program an algorithm you've gotta use CSS

https://developer.mozilla

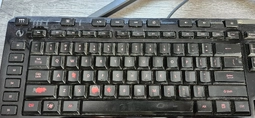

Keyboards

Hello /maho/, do you like keyboards? They're your main method of interfacing with your computer and the Internet at large, most of you probably spend the majority of your day behind one, so let's talk about them!

Here is a keyboard I picked up recently, it's a Focus FK-2001 made in the year 1995. This is around the start of the proliferation of cheap personal computers, which obviously necessitated cutting costs. Keyboards are a major area where manufacturers cut costs, and thus, the late 90s and the early 2000s saw a deluge of cheap, nasty rubber dome over membrane keyboards.

This one is still a mechanical keyboard, however, just not a very good one. It eschews the nice switches made by Alps Electric found in older keyboards in favor of cheap clones which don't feel or sound nearly as good. The case is light and creaky and is held together by clips which snap off easily. The dust cover is pretty cool though, and I do really like the look of the keyboard, particularly the keycaps: the Ctrl, Shift, and Alt legends are red, green, and blue respectively, which was supposed to help users of WordPerfect, I believe.

All in all, a pretty mediocre old board, I'll just be taking the keycaps and putting them on a custom keyboard build, and that's about it.

youtube algorithm

I'm trying to figure out how the youtube algorithm works when logged out.

When I visit the site from a new private window and look something up, the results seem to be pretty consistent across different browsers and sessions. But if I visit the same content as linked by a website, I get completely different (much worse) stuff, and it confuses me. Does it really put that much stock into that initial search?

I also just kind of wonder what youtube looks like to people just getting into it now. I made my account over a decade ago, so I have no clue where things are at with that kind of thing.

https://github.com/erengy

Is it possible to use the repos here to reprogram and create a Taiga that works on linux? I'm asking because I don't think I can possibly go back to keeping up with anime using just a notepad anymore after how convenient Taiga's been for me this whole time, and I'm not switching to Win 11 or the subscription-based 12 in the future. If I need to learn to code myself to do this then so be it, I want my convenience.

Real numbers

Today I begin a programming project to construct real numbers and various operations on them. I don't mean the wimpy limited precision floating point numbers that you've probably used if you've ever written a program in your life. Nor do I mean arbitrary precision numbers which can have as many digits as you like (until your computer runs out of memory) but still have irrecoverable rounding error because the digits end at some point. I mean exact representations of numbers that can be queried to any precision (which in practice may be limited by time and memory constraints).

This has been done many times before and probably much better than I will manage, so I don't expect to create anything really novel or useful. This is primarily for my own edification, to learn more about numbers and the computable/constructive reals. In this thread I will blog about what I'm doing and discuss the philosophy of numbers with anyone who's interested.
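For anyone wondering what "queried to any precision" could look like in practice, here is a minimal sketch of one common approach. It is not necessarily how this project does it. It assumes a real number is represented as a function that takes `n` and returns a rational within 2^-n of the true value; the names `const`, `sqrt2` and `add` are made up for illustration.

```python
from fractions import Fraction
from math import isqrt

# Assumed convention: a "real" is a function approx(n) that returns
# a Fraction within 2**-n of the number it stands for.

def const(q):
    """An exact rational: every query returns the same value."""
    q = Fraction(q)
    return lambda n: q

def sqrt2(n):
    """floor(sqrt(2) * 2**n) / 2**n, which is within 2**-n of sqrt(2)."""
    return Fraction(isqrt(2 << (2 * n)), 1 << n)

def add(x, y):
    """Sum of two reals: ask each operand for one extra bit,
    so the two errors together stay within 2**-n."""
    return lambda n: x(n + 1) + y(n + 1)

# 1 + sqrt(2), never rounded until someone asks for a precision.
one_plus_root2 = add(const(1), sqrt2)
approx = one_plus_root2(30)  # a Fraction within 2**-30 of 1 + sqrt(2)
```

Nothing is stored as digits here. The number is a recipe, and the precision is chosen by whoever calls it. Multiplication and division follow the same pattern but need a bound on the operands to decide how many extra bits to request.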

Apparently YouTube is experimenting with using AI to dub videos.

https://www.youtube.co

I am ADDICTED to this fucking yoshi's island romhack called Flutter

the premise is that to beat the levels 100% you need to use all sorts of edge case interactions between sprites and yoshi transformations and swimming that you would have never come across in the original game

the hack does a fairly good job of teaching you these things but it does assume you know the original game inside out

https://www.pcgamesn.c

https://www.tweaktown.

>NVIDIA's next-gen GeForce RTX 60 rumors begin: Rubin GPU, more VRAM, DLSS 5, TSMC 3nm node

>2026 Nvidia GPU hardware might launch six months early, but don’t panic yet

I've seen this show before...

Has anyone messed with local AI voice generation stuff lately? I hear there's been some advancements with stuff like https://github.com/Zyphra/Zonos/ and https://github.com/fishaudio/fish-speech but I haven't actually tried them yet. I still need to organize the raw extracted Utawarerumono voice lines for my own testing, too.

https://github.com/hedge-dev/XenonRecomp

There's now a way to play Xbox 360 games on PC. Seems pretty cool? What kind of games are there?

This is all thanks to Sonic fans apparently. If it's not bronies pushing technology then it's the Sonic autists. Other weirdos need to step up!

How is my password guessing game?

https://www.programiz.

crotchety old bloggers

I like Rachelbythebay. Woman who gravitates towards the underappreciated sysadmin/glue code role in tech companies and always has a bad time of it. She's built cool things in software and hardware, such as her own monito

How come there don't seem to be any majorly successful crypto payment processors? Is it because of the fact it's easy enough to send crypto already? I was pondering this and that seemed like it shouldn't exactly be the case, since I don't think I've really seen any crypto cards being accepted anywhere major like in retail stores and whatnot. Crypto transactions themselves are already mostly secure enough, but I guess you'd still need a framework for making sure the recipient and amount to send from an address is correct, also that you're the one who is initiating the transaction.

Are there other barriers that prevent their mass adoption, or is the rest mostly that people are scared of crypto?

Crypto Curiosity

I wanted to create a scam crypto whose purpose and design was to be rugpulled at an indeterminate but publicly announced point: when a random number generator that generates a new number each day landed on a certain number. At first I was thinking purely cynically, in that I wanted to pull an open scam on people that they would willingly buy into knowing it's a scam but try to beat each other out to cash out when the number hit anyway. But then I started thinking about it some more, and someone on #qa linked me litecoin's github, and I started wondering about more than that too.

What resources would one need to create a cryptocurrency entirely from scratch? Obviously some coding knowledge would be beneficial, but what languages do people use to make them? Also I think it'd probably be good to read up on cryptography since the security of mining and transactions is built around that, but I don't know what books are good reads for that sphere of math. Those are the two things I think one would need to make a coin, but is there more to study up on? I was also thinking that a coin in which there was a way to figure out how to just mine infinitely by cracking the algorithm would be cool too, but I'm not sure what that would even entail or if it's possible without making mining entirely meaningless.

I'll probably spend a year on this and then at some point finish when nobody cares about crypto anymore. But I think understanding crypto at a fundamental level would be fun.

¥ new "single sign-on" service gets implemented

¥ now have to go through six (6) login screens to access my workstation

¥ same password everywhere

¥ have to enter two soft tokens, tied to the same device, same app, same screen even

¥ have to wait for them to refresh and then enter them again to access the app portal even when hardlined into the intranet

When did "security" and "inconvenience" become synonymous? Are they just hoping hackers will decide this labyrinthine series of credential checks and verification pages isn't worth the data behind it and give up?

VRChat Lain Exhibition

This may be a bit too meta for /maho/, but there's a Serial Experiments Lain exhibition in VRChat until the 19th of January, 2025.

https://vr.anique.jp/about/access/

https://developer.mozilla

Looking at how gikopoi does some of its media functionality...

It's been a while since I looked at how extensive browser APIs have become.

>Screen Capture API

>CSS Painting API

>Geolocation API

>Web MIDI API

Browsers are practically mini operating systems.

What do you want in a multimedia OS?

Been working on a Linux distro for the last 2 years off and on. Will probably be ready to release it to the wild at some point early next year. I was wondering what people wanted out of a desktop/laptop OS geared toward the creation of content like audio production, video editing, programming, drawing and other forms of content creation. As we all know it's a huge pain to set up systems for this currently.

I plan to provide a lot of things out of the box geared towards these hobbies (both producing and consuming such content). A list of applications you use on the regular, especially those you're forced to get from git repos and compile from source, would be very helpful to me.

Current plans/status;

-Will run on Linux kernel. AMD64 is the only supported platform at the moment but should be easy to port to other archs. Technically, can run on them now with some simple config file changes.

-Kernel tuned for realtime scheduling along with many other performance tweaks

-Package manager that supports both using binaries and compiling from source. Ability to customize compile/run-time options. GUI to manage it if you don't want to use command line

-Multi-monitor support out of the box with GUI application to manage them

-New light DE based on Openbox along with some modifications I've made to it (can use most other DEs though if you want)

-A simple WM for "fall back" admin tasks when you need to fix something and the regular DE doesn't work. Or for people that just prefer a WM (ability to replace with anything you want of course)

-Consistent look and feel across Qt/gtk/other applications

-All dev and multimedia tools installed by default (but you can exclude stuff if you really want)

-ffmpeg/MLT/vapoursynth installed by default with a nice GUI application for editing and encoding video

-Various audio tools installed by default

-other misc. things like pre-installed applications for viewing and managing stuff like your manga, doujin and media collection

-Support for running Windows software out of the box (pre-configured wine) and support for running them in VM out of the box like native applications. GUI to manage both

-blah blah blah

The most important thing;

-A small base system that you can install on most anything.

-An installer for the less technically inclined (my grandmother should be able to use it to browse the internet or play an old Windows game)

-Ability to manually install for people that want that and to strip it down in the "suckless" way